Generative AI models, such as OpenAI’s GPT-4 or Stability AI’s Stable Diffusion, are notable for their ability to generate new text, code, images, and videos. However, training these models requires huge amounts of data, and developers face a growing shortage of it and even the risk of running out of training data altogether.

Given this shortage, training future generations of AI models on synthetic data could be an attractive option for large tech companies. AI-generated synthetic data is not only cheaper than real data but also virtually unlimited in quantity. It can also reduce privacy risks (for example, with medical data), and in some cases it can even improve AI performance.

Consequences of training with synthetic data

However, recent research from the Digital Signal Processing group at Rice University suggests that the continued use of synthetic data could have significant negative impacts on future generative AI models.

“The problem arises when this training with synthetic data is repeated over and over again, creating a self-consuming feedback loop – what we call an autophagy or ‘self-eating’ loop,” explains Dr. Richard Baraniuk, Professor of Electrical and Computer Engineering at Rice University. “When a model is repeatedly trained on synthetic data without new data from the real world, the quality of the model deteriorates, eventually leading to a phenomenon called ‘model collapse.’”

This phenomenon is reminiscent of mad cow disease, a serious neurodegenerative disease in cattle that can be transmitted to humans who eat infected beef. Outbreaks in the 1980s and 1990s were traced to the practice of feeding cattle the processed remains of slaughtered cows. By analogy, the researchers named the AI condition Model Autophagy Disorder (MAD), using “autophagy” from the Greek auto- (“self”) and -phagy (“eating”).

Consequences and future of generative AI

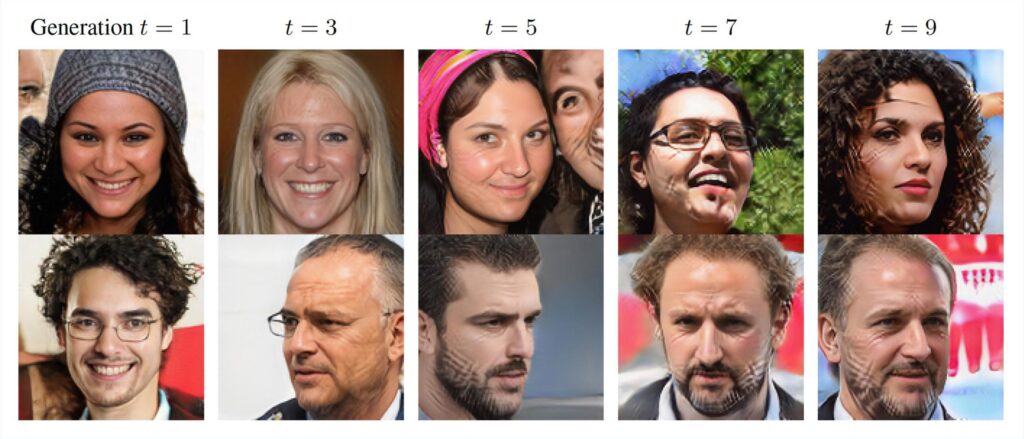

The research shows that when successive generations of models are trained on synthetic data without enough fresh real data, their outputs become increasingly distorted and lose quality, variety, or both. In other words, a steady supply of fresh real data is what keeps a generative model from degrading.

Dr. Baraniuk and his team studied three variations of self-consuming training loops to provide a more realistic view of how real and synthetic data are combined in training datasets:

- Fully synthetic loop: Each generation of the model is trained only on synthetic data produced by previous generations.

- Synthetic augmentation loop: Each generation's training set combines synthetic data from previous generations with a fixed set of real training data.

- Fresh data loop: Each generation is trained on a combination of synthetic data from previous generations and a fresh set of real data.

In the loops without enough fresh real-world data, the quality and diversity of the outputs decline sharply. If this continues, AI models will become increasingly inaccurate and less diverse over time.
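To make the contrast between these loops concrete, here is a minimal toy sketch in Python. It is not the researchers' code or experimental setup. As an assumption for illustration, the "model" is just a one-dimensional Gaussian fitted to its training data (mean and standard deviation), real-world data is drawn from N(0, 1), and the fitted standard deviation stands in for output diversity. The function names `fit`, `generate`, and `run_loop`, the loop labels, and all parameter values are hypothetical. Only the two extremes are simulated: a loop trained purely on its own synthetic outputs, and a loop that receives an equal-sized batch of fresh real data every generation.

```python
import random
import statistics


def fit(samples):
    """'Train' the toy model: estimate a Gaussian's mean and standard deviation."""
    return statistics.fmean(samples), statistics.pstdev(samples)


def generate(model, n, rng):
    """Draw n synthetic samples from the fitted toy model."""
    mu, sigma = model
    return [rng.gauss(mu, sigma) for _ in range(n)]


def run_loop(kind, generations=500, n=20, seed=0):
    """Return the fitted standard deviation (a diversity proxy) per generation.

    kind is "fully_synthetic" or "fresh_data" (hypothetical labels).
    The real-world distribution is assumed to be a standard normal N(0, 1).
    """
    if kind not in ("fully_synthetic", "fresh_data"):
        raise ValueError(f"unknown loop kind: {kind}")
    rng = random.Random(seed)
    # Generation 0 is trained on real data only.
    model = fit([rng.gauss(0.0, 1.0) for _ in range(n)])
    history = [model[1]]
    for _ in range(generations):
        data = generate(model, n, rng)  # outputs of the previous generation
        if kind == "fresh_data":
            # Mix in a brand-new batch of real samples every generation.
            data += [rng.gauss(0.0, 1.0) for _ in range(n)]
        model = fit(data)
        history.append(model[1])
    return history


fully = run_loop("fully_synthetic")
fresh = run_loop("fresh_data")
print(f"purely synthetic loop: std {fully[0]:.3f} -> {fully[-1]:.2e}")
print(f"fresh real data loop:  std {fresh[0]:.3f} -> {fresh[-1]:.3f}")
```

In the purely synthetic run, each refit loses a little spread on average, so the standard deviation drifts toward zero over hundreds of generations; in the fresh-data run, the new real samples keep pulling it back toward 1. The exact printed numbers depend on the seed.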

To make the simulations more realistic, the researchers added a sampling bias parameter that models “cherry-picking”: the tendency of users to favor data quality over diversity. Favoring quality in this way can preserve data quality across more model generations, but it reduces diversity, so the models' outputs become less varied over time.
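The effect of a sampling bias can be sketched with a similar toy loop trained purely on its own outputs. As an assumption for illustration only, the hypothetical parameter `lam` scales down the standard deviation used when sampling from the fitted Gaussian, so outputs cluster near the most typical values, a crude stand-in for cherry-picking; `lam = 1` means no bias. This is not the paper's implementation, and the function name and parameter values are hypothetical.

```python
import random
import statistics


def biased_synthetic_loop(lam, generations=50, n=20, seed=0):
    """Toy self-consuming loop with a sampling bias lam in (0, 1].

    lam = 1 samples the fitted Gaussian faithfully; lam < 1 shrinks the
    sampling spread, keeping only 'typical' (cherry-picked) outputs.
    Returns the fitted standard deviation after each generation.
    """
    rng = random.Random(seed)
    real = [rng.gauss(0.0, 1.0) for _ in range(n)]  # real data, assumed N(0, 1)
    mu, sigma = statistics.fmean(real), statistics.pstdev(real)
    history = [sigma]
    for _ in range(generations):
        # Biased sampling: draw from a narrower Gaussian than the one fitted.
        synthetic = [rng.gauss(mu, lam * sigma) for _ in range(n)]
        mu, sigma = statistics.fmean(synthetic), statistics.pstdev(synthetic)
        history.append(sigma)
    return history


unbiased = biased_synthetic_loop(lam=1.0)
biased = biased_synthetic_loop(lam=0.9)
print(f"lam=1.0: final std {unbiased[-1]:.3e}")
print(f"lam=0.9: final std {biased[-1]:.3e}")
```

Because both runs share a seed, the biased run's fitted spread after `t` generations is, up to rounding, `lam ** t` times the unbiased one, which isolates the extra loss of diversity caused by the bias.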

In the worst case, if left unchecked, the MAD phenomenon could pollute data across the entire Internet, degrading both its quality and its diversity.

Note:

- Model Autophagy Disorder (MAD): The loss of output quality and diversity that occurs when an AI model is repeatedly trained on synthetic data without enough fresh real data.

- Synthetic data: Data generated by AI rather than collected from real-world sources; it is often used to train AI models.

- Sampling bias parameter: A parameter that models the tendency of users to favor high-quality data over variety, which reduces the diversity of AI models' outputs.

Original paper: “Self-Consuming Generative Models Go MAD” by Sina Alemohammad, Josue Casco-Rodriguez, Lorenzo Luzi, Ahmed Imtiaz Humayun, Hossein Babaei, Daniel LeJeune, Ali Siahkoohi and Richard Baraniuk, International Conference on Learning Representations (ICLR 2024), 8 May 2024.